To meet ambitious workforce development goals, policymakers need to apply evidence-based practices at scale. Policymakers can choose from a growing list of successful evidence-based programs, but they still face uncertainty over whether a chosen program will work in a new context. What works in one place or time may fail in another. This is the fundamental problem of expanding evidence-based programs: evidence suggests programs work in a given context, but policymakers may need them to work in a new context in which evidence of success does not yet exist (Williams 2018).

A new discussion paper provides workforce development policymakers with a two-step method to incorporate evidence-based programs at scale. The method is called scale analysis. The paper includes an example of scale analysis, applying the method to a hypothetical metropolitan area that seeks to adopt an evidence-based training program for youth with barriers to employment.

The paper also reviews a sample of recent workforce development randomized controlled trials (RCTs) and reports to what extent this body of research informs policymakers about what works at scale rather than just what works in general. Despite a growing literature that uses rigorous RCTs, the paper shows that many workforce development evaluations have study designs that limit application of their findings to other contexts:

- Most of the studies in the review feature nonrandom samples from the target population.

- Most of the studies use a small "treatment" group of individuals, defined as the group that receives the primary workforce development intervention.

- Most of the studies are conducted in specific geographic locations with unique political, economic, demographic, and institutional contexts.

Scale analysis overview

In workforce development strategic planning, one of the most difficult steps involves going from broad goals to specific programs with numeric targets for success. For example, city leaders in a major metropolitan area request a labor market analysis and find a skills gap in the information technology (IT) sector. The report finds a labor supply shortfall of 1,000 IT workers. How does the group of city leaders choose a combination of programs that can meet this goal? How do they convince community stakeholders and potential funders (such as elected officials, philanthropy, and nonprofits) that the proposal has the potential to succeed?

Scale analysis is a method to help policymakers conduct background research, list assumptions, and test the sensitivity of projected employment outcomes to violations of the core assumptions. The analysis helps policymakers identify strengths and weaknesses in their efforts to develop programs that meet specific goals and objectives. Scale analysis begins after policymakers choose specific evidence-based training programs. It proceeds in two sequential steps: mechanism mapping and sensitivity analysis.

Mechanism mapping

Mechanism mapping is a method to check assumptions when scaling up a program. Pilot programs typically follow a theory of change, or a sequence of steps that links program inputs (such as instructional faculty) to program outputs (like graduate employment). For policymakers seeking to scale up a program, the relevant question is how the theory of change applies in the new context. Specifically, what assumptions were required for the pilot study to succeed, and are those assumptions likely to hold in the new context?1

In brief, the process involves several simple steps, although the difficulty of the process depends on the complexity of the program’s theory of change. The first step is to identify the program’s theory of change and list the program’s inputs, activities, outputs, intermediate outcomes, and final outcomes. Second, list the contextual assumptions that underlie the causal logic of the theory. What assumptions must be maintained for the program inputs to be available, activities to be performed, and outputs to be produced? What assumptions connect the outputs to the intermediate outcomes, and how do the intermediate outcomes affect the final outcomes of interest?

For example, many workforce initiatives must begin with a simple question about the target population: who and where are the potential workers we seek to train? To answer this question, workforce policymakers must investigate core assumptions about the number of potential workers available, their interest in enrolling in the program, and their readiness for training and employment.

Sensitivity analysis

The second step of scale analysis is to examine program assumptions using sensitivity analysis. Sensitivity analysis, a common method in applications such as cost-benefit analysis, can help workforce development policymakers in two ways: (1) evaluating the sensitivity of performance projections under different contextual circumstances, and (2) communicating the credibility of performance projections to internal and external stakeholders, which supports more informed decisions and increases stakeholder commitment to the program.

Policymakers make numerous assumptions when projecting the impact of workforce programs in a new context. These projections are more sensitive to changes in some assumptions than in others. Workforce programs typically depend on key assumptions about program recruitment, retention, graduation, and postgraduate employment rates. Sensitivity analysis allows policymakers to assess how robust their projections are to changes in these key assumptions.

Policymakers should begin this step by performing sensitivity analysis on the assumptions underlying projections about these key outcomes. For example, how does the estimated number of individuals trained and employed change under different assumptions about participant recruitment and enrollment?
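As a rough illustration of the question above, the sketch below projects the number of individuals trained and employed by multiplying an eligible population through the recruitment, retention, graduation, and employment stages, then varies only the recruitment assumption. The function name `projected_employed` and every number in it are hypothetical values chosen for illustration; they are not figures from the paper, and the simple multiplicative structure is itself an assumption.

```python
def projected_employed(eligible, recruit_rate, retention_rate,
                       graduation_rate, employment_rate):
    """Multiply a pool of eligible workers through each program stage."""
    enrolled = eligible * recruit_rate
    retained = enrolled * retention_rate
    graduates = retained * graduation_rate
    return graduates * employment_rate


# Hypothetical baseline assumptions (illustrative only, not from the paper).
baseline = {
    "eligible": 5000,
    "recruit_rate": 0.10,
    "retention_rate": 0.80,
    "graduation_rate": 0.75,
    "employment_rate": 0.70,
}

# Vary the recruitment assumption while holding the others at baseline.
for rate in (0.05, 0.10, 0.15):
    scenario = {**baseline, "recruit_rate": rate}
    employed = projected_employed(**scenario)
    print(f"recruitment rate {rate:.0%}: about {employed:.0f} employed")
```

Repeating the loop for each of the other rates, one at a time, would show which assumption moves the projection the most under these illustrative inputs.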

Sensitivity analysis helps policymakers quantify this uncertainty by creating a range of projected outcomes under different assumptions. For example, a projection of 100 workers assumes that the unemployment rate is approximately 3 percent, that the labor market is tight, and that 100 percent of program graduates obtain employment. Sensitivity analysis asks how that projected number changes if the assumption about the unemployment rate is incorrect. How many graduates will the program place if the unemployment rate increases to 5 percent?
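The range described above can be sketched in a few lines. The example starts from the 100-worker projection in the text (100 graduates, all placed) and recomputes placements under alternative placement rates. The scenario labels and the 85 and 70 percent rates are hypothetical; the text does not say how placement responds to the unemployment rate, so that mapping is purely an assumption here.

```python
def placement_range(graduates, placement_rates):
    """Return (low, high) projected placements across the given scenarios."""
    projections = [graduates * rate for rate in placement_rates.values()]
    return min(projections), max(projections)


# 100 graduates at 100 percent placement reproduces the 100-worker projection.
graduates = 100

# Hypothetical placement-rate scenarios (assumed for illustration only).
placement_rates = {
    "tight labor market": 1.00,
    "moderate labor market": 0.85,
    "slack labor market": 0.70,
}

for name, rate in placement_rates.items():
    print(f"{name}: about {graduates * rate:.0f} graduates placed")

low, high = placement_range(graduates, placement_rates)
print(f"projected range: about {low:.0f} to {high:.0f} graduates placed")
```

Reporting the low and high ends together, rather than a single point estimate, is one way to show stakeholders how much the projection depends on the labor market assumption.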

What is scale in workforce development?

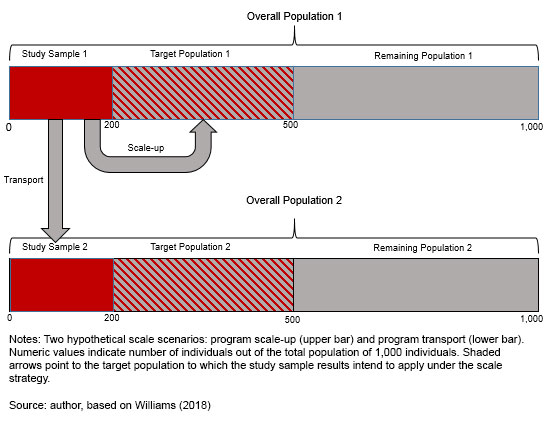

There are at least two ways to frame the discussion about program scale-up. In one sense, scale-up means expanding a successful program to a larger population. In a second sense, scale-up refers to the transport of a successful program from one context like a city to another. The chart illustrates these two ways to represent the scale-up of a program. The initial study sample (Study Sample 1 and 2) is a subset of a target population. The study sample is a random or nonrandom sample from the target population (Target Population 1 and 2); policymakers intend to generalize the study sample results to the target population.

The upper panel of the figure represents the first form of program scale-up: expanding the program to the full target population. The study sample could be a small pilot study, while the target population could be all low-income, unemployed adults in a U.S. state. The grey curved arrow indicates that the goal is to apply the results from the study sample to the entire target population.2

Program Scale-Up and Program Transport

The lower panel of the figure represents the second form of program scale-up: program transport to another target population. The grey arrow pointing straight down indicates that the goal is to apply the results from Study Sample 1, which was drawn from Target Population 1, to Study Sample 2, a sample from a different target population. Policymakers then intend to generalize the results from Study Sample 2 to Target Population 2.

Banerjee et al. (2017) discuss several features of RCTs that may limit scalability: context dependence, randomization or site-selection bias, and piloting or implementation bias. Context dependence means that the program's impact depends on social, economic, geographic, and political characteristics that vary across locations. Randomization or site-selection bias occurs when the study sites or individuals that agree to participate in RCTs are not representative of the population of interest. Piloting or implementation bias means that program effects may differ when the program is implemented at a larger scale than the original pilot study. For example, a small nonprofit may operate a pilot study, while a larger governmental agency may operate the program at scale. The paper provides examples of all three threats to scale drawn from workforce development evaluations.

Finally, workforce development programs often rely on a network of partners to implement a program. This leads to a special form of context dependence called "embeddedness." The more local organizations a pilot program depends on for implementation, the more embedded it is in its local context. These partner organizations are unlikely to exist in the same form in a new context, and, of course, policymakers cannot transport a partner organization the way they transport a program model. Thus, when policymakers transport an embedded program to a new location, they have to re-create or develop a network of providers similar to the network in the original pilot study.

By Alexander Ruder, senior CED adviser

References

Banerjee, Abhijit, Rukmini Banerji, James Berry, Esther Duflo, Harini Kannan, Shobhini Mukerji, Marc Shotland, and Michael Walton. 2017. "From Proof of Concept to Scalable Policies: Challenges and Solutions, with an Application." Journal of Economic Perspectives 31 (4): 73–102.

Gertler, Paul J., Sebastian Martinez, Patrick Premand, Laura B. Rawlings, and Christel M. J. Vermeersch. 2016. Impact Evaluation in Practice. Second edition. World Bank Group.

Williams, Martin J. 2018. "External Validity and Policy Adaptation: From Impact Evaluation to Policy Design." Working Paper.

_______________________________________

1 Williams (2018) introduces mechanism mapping as a method to improve scale-up efforts, while Gertler et al. (2016) review the importance of following a theory of change in program evaluation more broadly.

2 The distinction between program scale-up and program transport is discussed in Williams (2018).